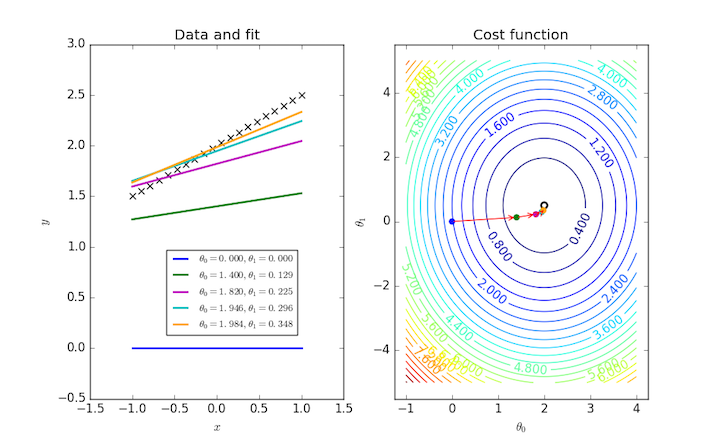

MathType - Gradient descent is an iterative optimization algorithm for finding local minima of multivariate functions. At each step, the algorithm moves in the direction opposite to the gradient, consequently reducing…

By a mysterious writer

Last updated 22 December 2024
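The update rule in the description above (step against the gradient, repeat) can be sketched in a few lines of Python. This is a minimal illustration and is not taken from any of the linked articles: the function name `gradient_descent`, its parameters (`step_size`, `max_iters`, `tol`), the fixed step size, and the quadratic test function are all assumptions chosen for the example.

```python
from typing import Callable, List, Sequence


def gradient_descent(
    grad: Callable[[Sequence[float]], List[float]],
    x0: Sequence[float],
    step_size: float = 0.1,   # assumed fixed step size (learning rate)
    max_iters: int = 1000,    # assumed iteration cap
    tol: float = 1e-8,        # stop once the gradient norm falls below this
) -> List[float]:
    """Repeatedly step in the direction opposite to the gradient."""
    x = list(x0)
    for _ in range(max_iters):
        g = grad(x)
        # A near-zero gradient means we are close to a stationary point.
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        # Core update: x <- x - step_size * grad f(x)
        x = [xi - step_size * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical test function: f(x, y) = (x - 1)^2 + 2 * (y + 2)^2,
# whose only minimum is at (1, -2).
def f(p: Sequence[float]) -> float:
    return (p[0] - 1) ** 2 + 2 * (p[1] + 2) ** 2


def grad_f(p: Sequence[float]) -> List[float]:
    return [2 * (p[0] - 1), 4 * (p[1] + 2)]


minimum = gradient_descent(grad_f, [0.0, 0.0])
print(minimum, f(minimum))
```

With a fixed step size the method only converges if the step is small enough for the function at hand. For a general function it finds a local minimum, which need not be the global one.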

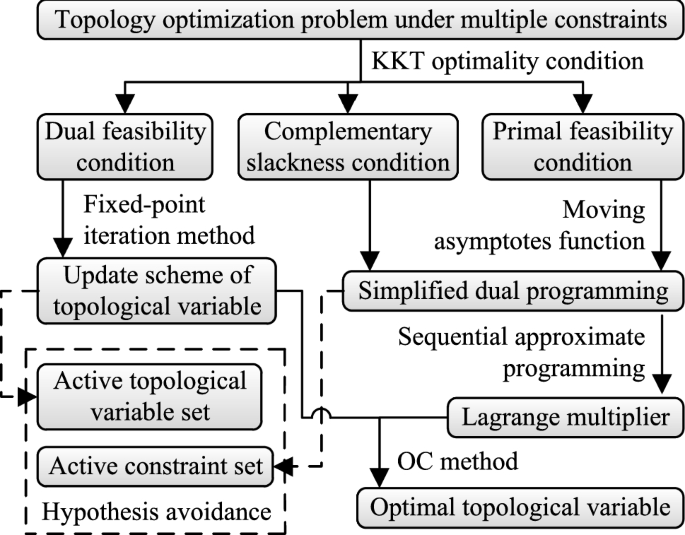

An optimality criteria method hybridized with dual programming for topology optimization under multiple constraints by moving asymptotes approximation

Gradient descent optimization algorithm.

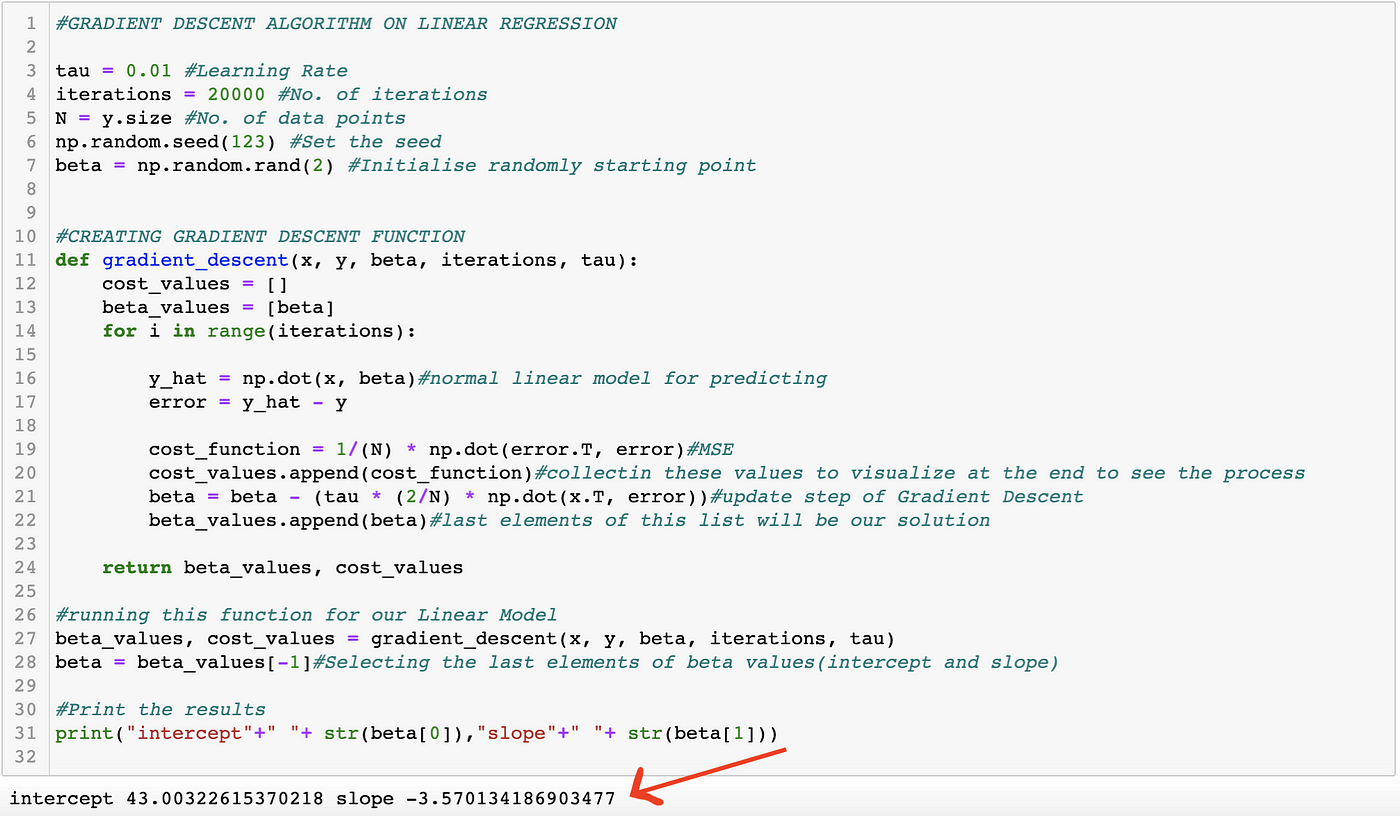

Explanation of Gradient Descent Optimization Algorithm on Linear Regression example, by Joshgun Guliyev, Analytics Vidhya

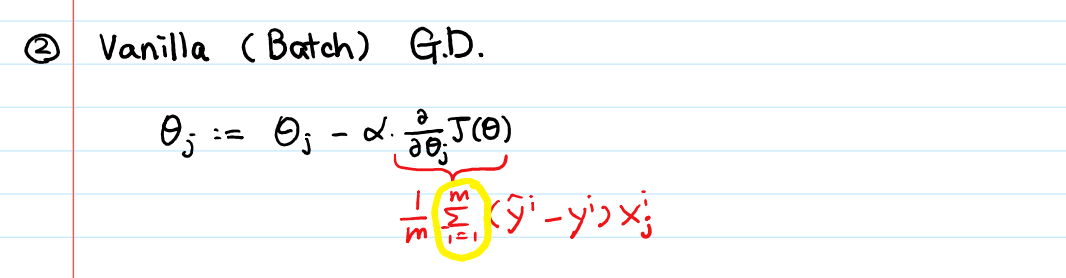

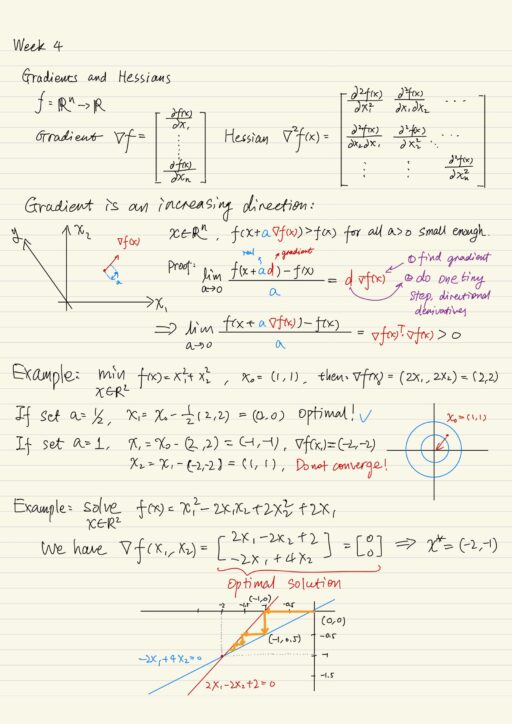

Intuition (and maths!) behind multivariate gradient descent, by Misa Ogura

Optimization Techniques used in Classical Machine Learning ft: Gradient Descent, by Manoj Hegde

Gradient Descent algorithm. How to find the minimum of a function…, by Raghunath D

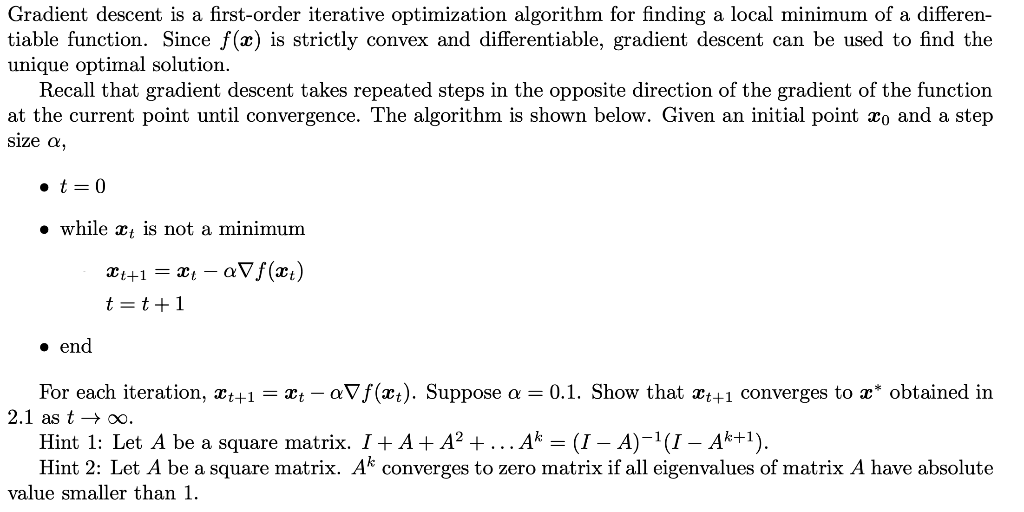

Solved Gradient descent is a first-order iterative

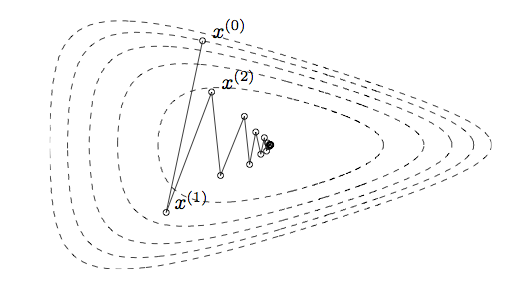

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization

How does gradient descent algorithm work for finding the minimum of a function with two variables? - Quora

Recommended for you

-

Gradient descent - Wikipedia22 dezembro 2024

-

Method of Steepest Descent -- from Wolfram MathWorld22 dezembro 2024

-

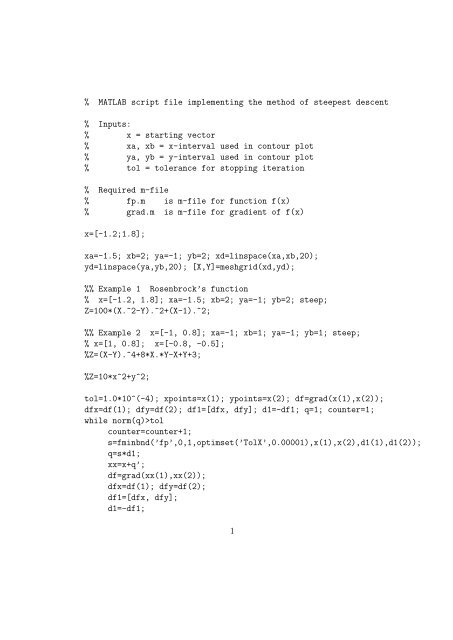

MATLAB script file implementing the method of steepest descent22 dezembro 2024

MATLAB script file implementing the method of steepest descent22 dezembro 2024 -

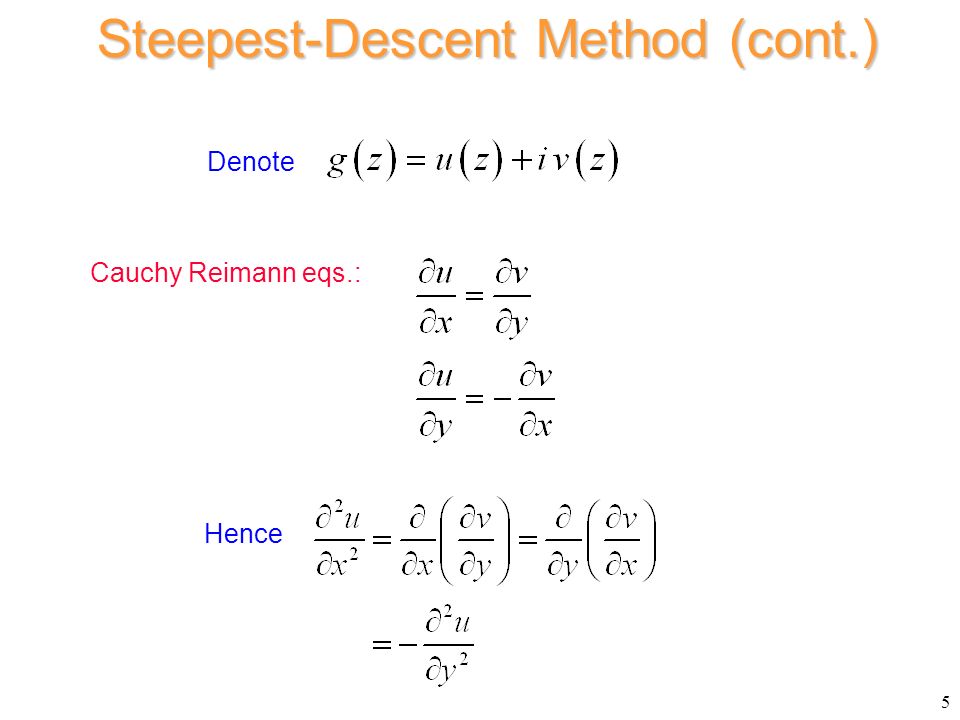

The Steepest-Descent Method - ppt video online download22 dezembro 2024

The Steepest-Descent Method - ppt video online download22 dezembro 2024 -

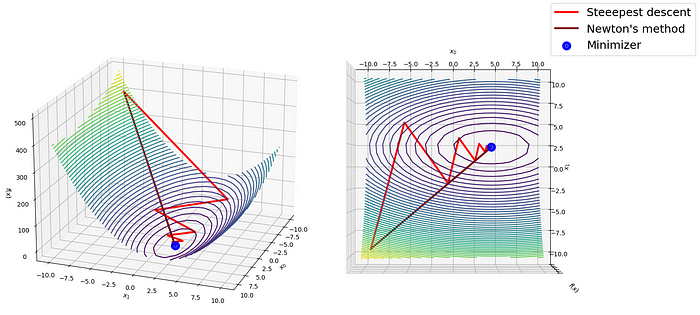

Steepest Descent and Newton's Method in Python, from Scratch: A… – Towards AI22 dezembro 2024

Steepest Descent and Newton's Method in Python, from Scratch: A… – Towards AI22 dezembro 2024 -

Stochastic Gradient Descent Algorithm With Python and NumPy – Real Python22 dezembro 2024

Stochastic Gradient Descent Algorithm With Python and NumPy – Real Python22 dezembro 2024 -

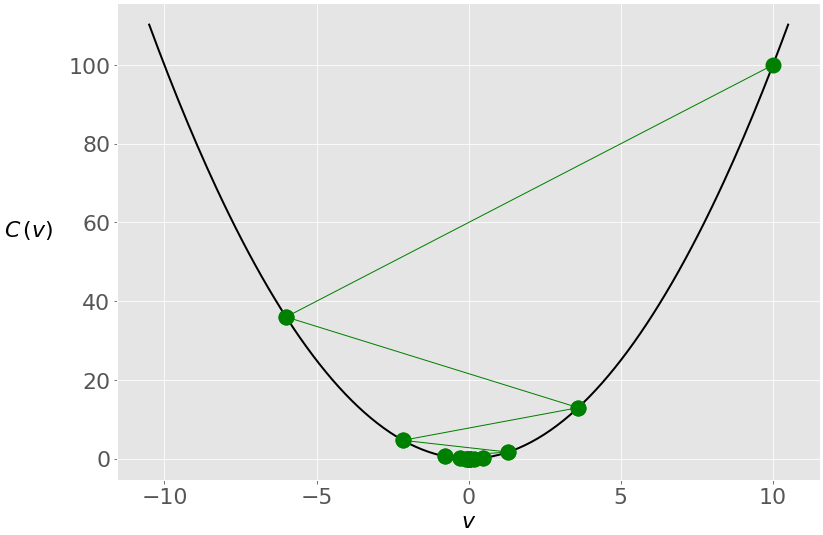

Visualizing the gradient descent method22 dezembro 2024

Visualizing the gradient descent method22 dezembro 2024 -

gradient-descent-backtracking.png22 dezembro 2024

gradient-descent-backtracking.png22 dezembro 2024 -

Non-Linear Programming: Gradient Descent and Newton's Method - 🚀22 dezembro 2024

Non-Linear Programming: Gradient Descent and Newton's Method - 🚀22 dezembro 2024 -

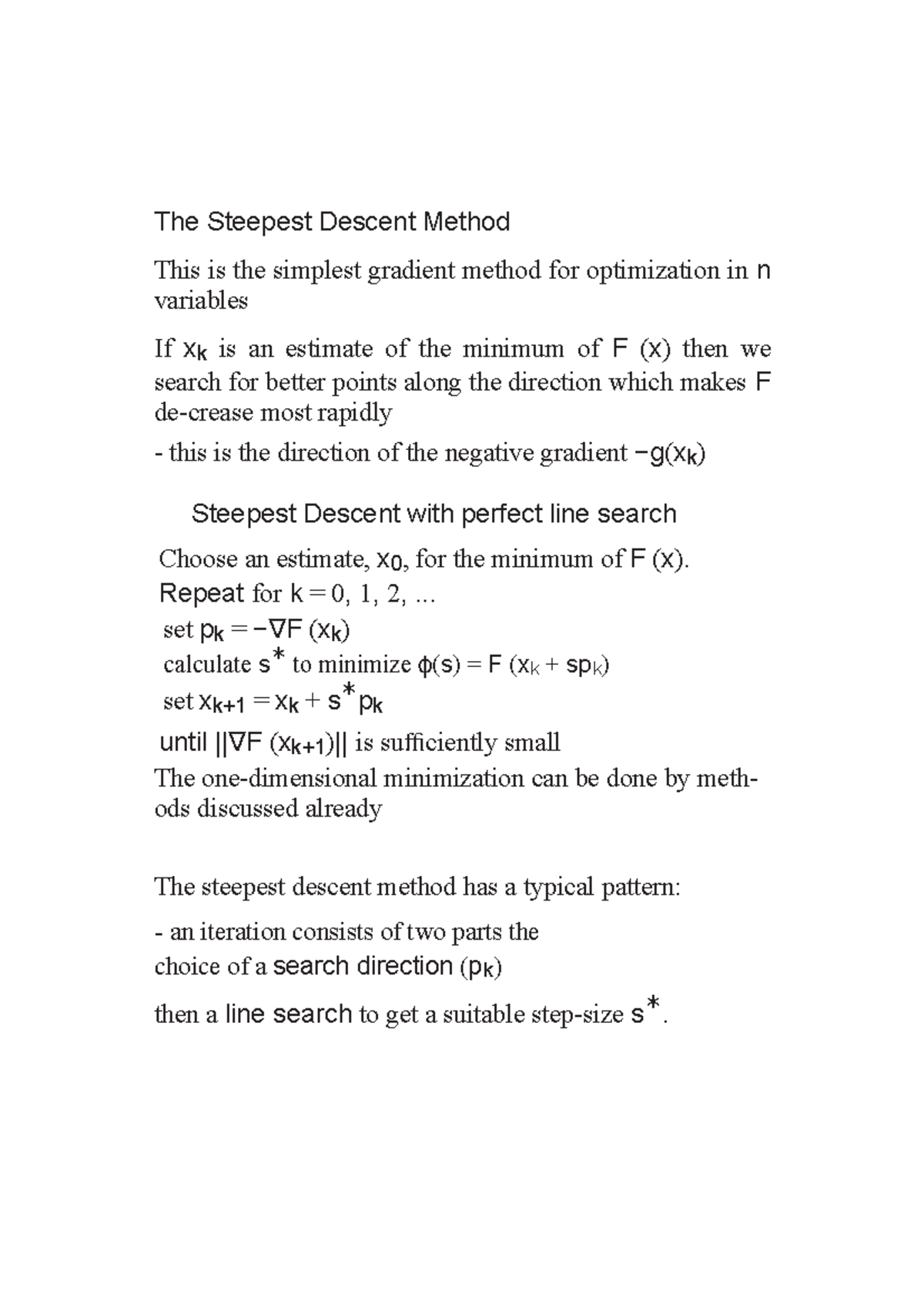

The Steepest Descent Method - Summary - The Steepest Descent Method This is the simplest gradient - Studocu22 dezembro 2024

The Steepest Descent Method - Summary - The Steepest Descent Method This is the simplest gradient - Studocu22 dezembro 2024

você pode gostar

-

Boy Cartoon png download - 800*600 - Free Transparent Astro Boy22 dezembro 2024

Boy Cartoon png download - 800*600 - Free Transparent Astro Boy22 dezembro 2024 -

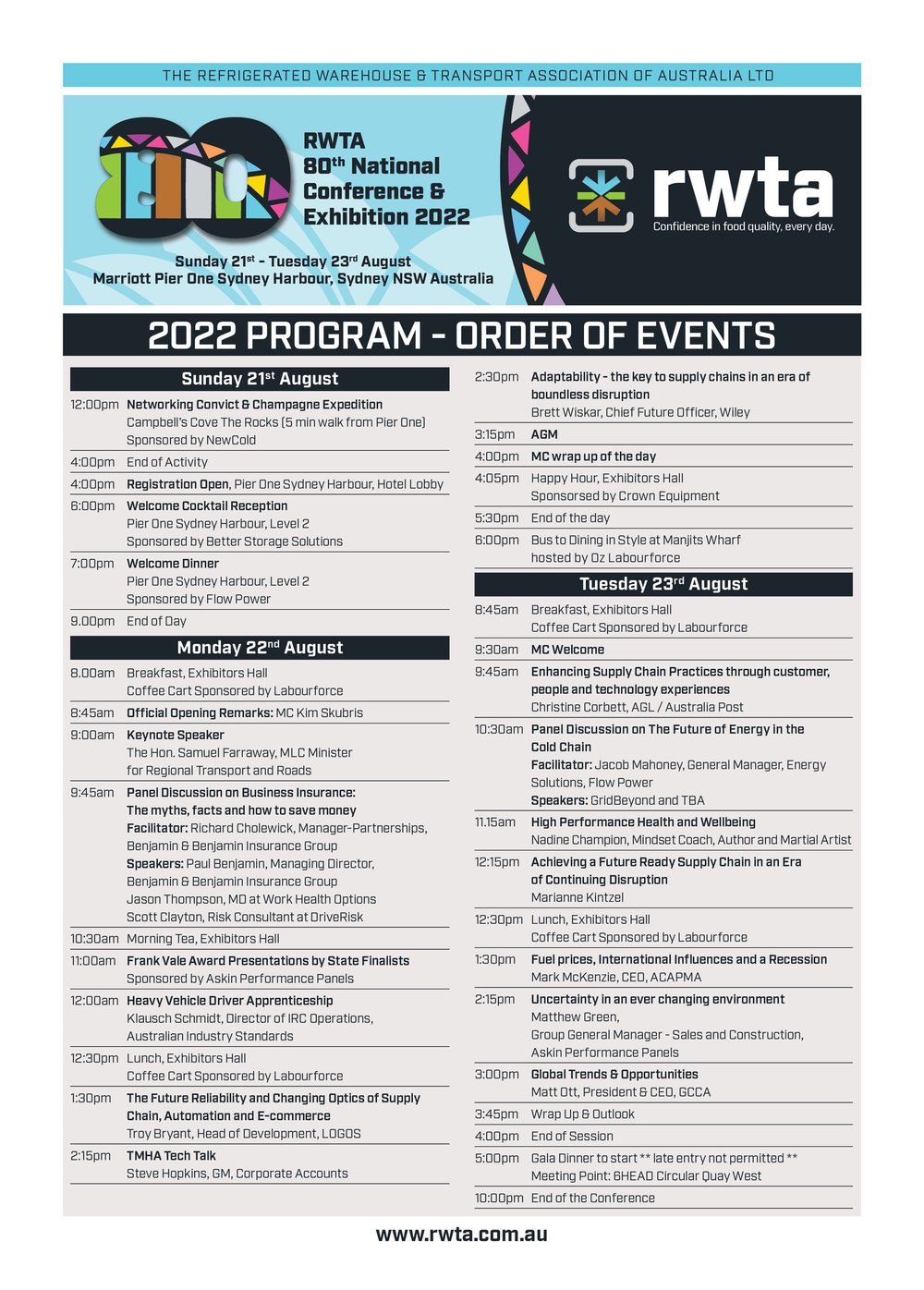

Conference 2022 — RWTA22 dezembro 2024

Conference 2022 — RWTA22 dezembro 2024 -

IHOP Orlando - um café da manhã americano22 dezembro 2024

IHOP Orlando - um café da manhã americano22 dezembro 2024 -

Highlights from Hadjuk Split vs. PAOK - PAOKFC22 dezembro 2024

Highlights from Hadjuk Split vs. PAOK - PAOKFC22 dezembro 2024 -

Jogo de tabuleiro de xadrez Jogar, xadrez, jogo, criança, amizade22 dezembro 2024

Jogo de tabuleiro de xadrez Jogar, xadrez, jogo, criança, amizade22 dezembro 2024 -

Anime Profile Boy posted by Zoey Sellers, cute boy profile HD22 dezembro 2024

Anime Profile Boy posted by Zoey Sellers, cute boy profile HD22 dezembro 2024 -

Nintendo Switch - Aqui ficam alguns dos títulos para a Nintendo Switch disponíveis em 2021 e 2022. Já escolheram os que vão jogar?22 dezembro 2024

-

Frases De Indiretas Do Bem: Gente que tem o cabelo bonito.22 dezembro 2024

Frases De Indiretas Do Bem: Gente que tem o cabelo bonito.22 dezembro 2024 -

MASH VS THE EVIL EYE - Mashle: Magic and Muscles Episódio 9 REACT22 dezembro 2024

MASH VS THE EVIL EYE - Mashle: Magic and Muscles Episódio 9 REACT22 dezembro 2024 -

Game of Thrones Season 1 Episode 10: Fire and Blood Photos - TV Fanatic22 dezembro 2024

Game of Thrones Season 1 Episode 10: Fire and Blood Photos - TV Fanatic22 dezembro 2024